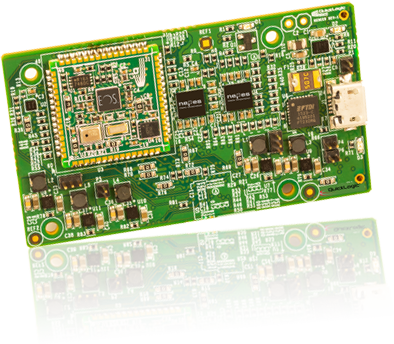

QuickAI™

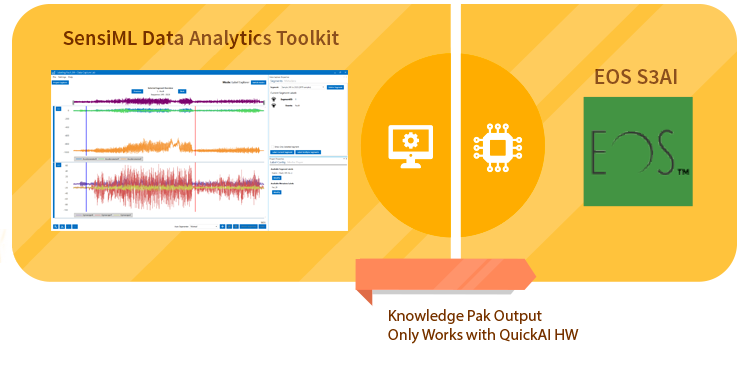

With the advent of Industry 4.0 and the proliferation of IoT, there is a need for smarter devices that process sensor data AND make decisions LOCALLY. Traditional cloud-based approaches to designing AI systems make it difficult to migrate AI to endpoint devices because of aggressive battery-life, processing-capability and environmental constraints. These limitations and requirements call for a different type of artificial intelligence at the endpoint device: one that is zero latency, low power, low cost and easy to train. Security is a non-factor because, unlike in traditional cloud-based AI systems, sensor data never leaves the endpoint device.

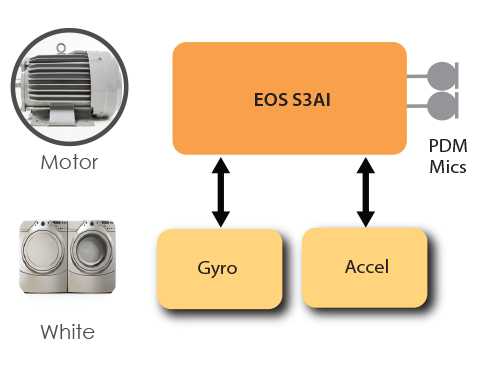

Industrial Predictive Maintenance

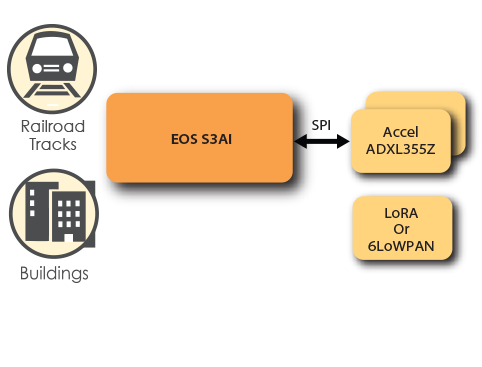

Structural Health Monitoring
